The management of exploration data, and of seismic data in particular, has been one of those interminable issues that have rumbled on in the background for decades.

The inherent features of seismic data lend themselves to generating logistical problems that are immense in scope, yet ought to be simple to resolve with the appropriate application of technology and an understanding of the data and the use to which it is put.

The battles that have raged in this specialist area, over how to bring this large information source under control and direct it to the needs of users so as to enhance and support their business operations and key exploration decision processes, have ultimately been fought to a standstill. The truce that now exists accepts a less than ideal position in terms of the storage, management, access and retrieval of seismic data.

Addressing whole surveys and lines is perhaps simple enough. Cutting and selecting data, whether for internal use, analysis and interpretation or for delivery to a partner or client, remains difficult, time-consuming and prone to error. Managing this data (auditing, controlling, backing up, copying and migrating to new media types) is still a time-consuming, expensive and messy business that soaks up large human resources.

The industry itself has attempted to address these issues through format standardization, and this has been successful to some extent, but varying flavors of SEG-Y can still be found around the world. Media type has been equally problematic, with a short-sighted view that the 3590/3592 media (and/or USB drives) utilized in the field would also be the media selected for long-term data management, a solution that exacerbates the data storage issues by expanding libraries to hundreds of thousands of tapes.

Periodic re-mastering of data has been a time-consuming and prohibitively expensive operation which, where the original data sets are retained, in many instances only serves to produce a further set of tapes to manage. (Few companies have the confidence to destroy their original tapes.)

Background

During the past 30 years the ability to capture large amounts of such data has grown exponentially, in line with the advancement in exploration acquisition systems and associated computer technologies. Today significant budgets, measured in many millions of dollars, are allocated to capturing this information in the field and to its subsequent electronic processing and interpretation. The tools available to manage this important resource remain much as they were in the 1990s; no real advance in data management has been seen during that time. The tired traditional approach, the status quo, remains in force, mostly as a result of data management fatigue brought on by multiple failures in the industry to design systems that can handle this data type.

Oil companies and contractors alike have all attempted their own in-house solutions, bought in third-party applications, developed workarounds, and sweated and cursed at the unyielding and protracted costs and logistics involved in arriving at a solution that works for them. Confidence in these systems is poor, which results in companies preferring not to spend money in this area where previous attempts have failed or proven to be less than hoped for.

In recent years the industry trend has been towards integrated solutions, where fast, effective data management solutions are but a prerequisite to the establishment of a Corporate or National Databank.

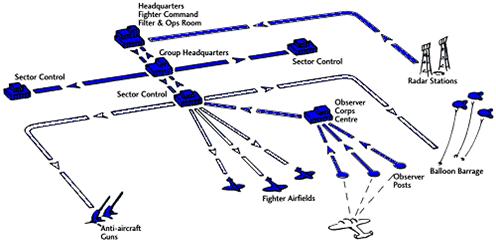

This offers the oil company or contractor a full relational database system fronted by a GIS (Geographic Information System), such as ESRI’s ArcView, linked to a central store managed by an HSM (Hierarchical Storage Management) system providing near-line storage.

It was felt that such a system provides the potential to integrate all relevant Geophysical, Geological, Cultural and Spatial data, within one environment.

These all-embracing, monolithic solutions are dogged by complexity, compromise and infrastructure running costs that prove to be unacceptable and unsupportable in the long term. Access to data remains problematic. Reasonable success has been achieved with lesser data volumes, as in well data, production data, cultural and topographical data, but seismic remains the Achilles heel.

Moreover, despite improvements in security technology, problems remain in establishing clear divisions (Chinese walls) within multi-client environments. This leads to a lack of confidence, where oil companies and contractors perceive a potential risk to proprietary data.

The objective of these projects was essentially that a central repository would hold all released exploration data and be accessed remotely by authorized users.

In practice the final solutions have been diluted to a level that is:

- Technically practical

- Financially affordable

- Risk Free

The HSM systems variously utilized are configured from a number of third-party hardware and software components.

Current Status

My recent experience in this domain, talking with a wide range of exploration contractors and oil companies, suggests that the situation has not improved. They all seek a resolution to these problems, but are constrained by infrastructure that is expensive and time-consuming to change, upgrade or interface.

Data extraction and delivery, in particular for multi-client data organizations (spec surveys), becomes a daily struggle to meet turnaround times and to establish full quality control processes that eliminate expensive and often embarrassing errors.

Many opt out of electronic data management and operate on an as-needed basis, selecting data from a physical store and passing it through their processing departments or outsourcing to local tape transcription vendors. This inhibits fast and accurate selection of the data, often producing confusion over what actually exists and where that data is stored. Is the data in-house, in the processing center or with the storage contractor? Either way, delay and cost mount as valuable time is wasted searching for the data.

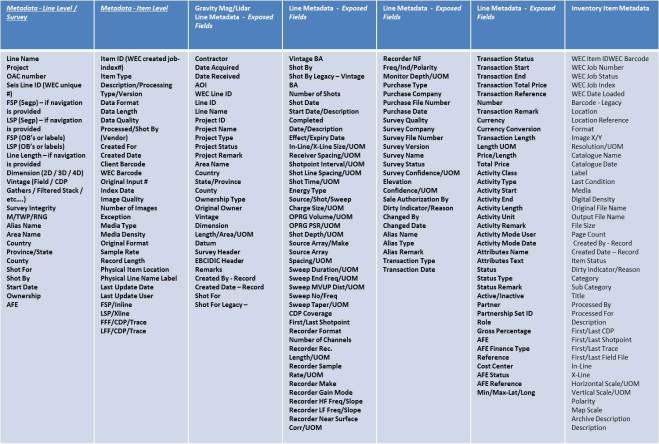

All these database systems are similar in structure, but vary in the specific target or solution and in the range of data that can be managed within the system.

In principle they consist of:

- Data Model – POSC Epicenter, PPDM or proprietary.

- An RDBMS, usually Oracle.

- A GIS interface such as ArcView.

- An HSM – Offering near-line robotics utilizing 3480/3590/3592 media.

Data Models

In the past few years the industry has attempted to define a standard data model to facilitate the management and exchange of E&P data. Of these, POSC’s Epicenter and PPDM have perhaps been the most commonly recognized.

- POSC Epicenter. Developed by the Petrotechnical Open Systems Corporation, a consortium of 45 oil companies. Today POSC Epicenter is the largest data footprint available.

- PPDM. The Public Petroleum Data Model.

The concept of creating a standard data model was seen as exciting and forward-looking, especially as it coincided with the DISKOS and CDA initiatives. It seemed that for the first time the industry could establish central data repositories both physical and electronic.

The potential benefits were seen as enormous, with significant cost reductions in data storage & management, data transfer and ultimately on software and data integration.

Few database systems available then were truly able to share data across applications. This caused delay, frustration and, commonly, data loss. Agreement on the Epicenter data model by the 45 participating oil companies was designed to enable significant and far-reaching enhancements in the industry’s ability to exchange information.

This has not been the case. POSC today is still very much a theoretical model, with few examples of a full implementation of Epicenter and a higher number of “POSC compliant” implementations. The pressure to achieve standards, once high, has fallen back to a more practical approach of establishing links and data loaders between differing data models and applications. This is the accepted face of integration.

The failure of POSC and similar initiatives to establish universally accepted standards has always come down to the inability of research and development technical staff, and of idealists, to understand the practical, on-the-ground problems of managing data and the diverse manner in which that data is addressed within each oil company. Thus a data model like POSC Epicenter becomes very much a compromise, and that compromise creates a degree of complexity which serves to undermine the flexibility and success of the solution.

By way of example, Epicenter had some 17,000 relationships defined versus some 3,000 data attributes, whereas the IRIS21 data model from Petroconsultants/I.H.S. had some 12,000 data attributes and 3,000 relationships. In my personal view IRIS21 was a far more practical, manageable beast than Epicenter.

Outsourcing

The concept of Outsourcing is a direct result of changes within the industry, structural as well as technology based. These changes reflect the outsourcing programmes, where oil companies now feel that in response to the reduction of in-house skilled staff, they require a full service to address data management across all areas of business operations.

It is also a reflection of the desire of some companies to deal with one prime contractor, reducing manpower and reducing costs associated with data management.

Sourcing from one prime contractor has the advantage of consistency of service and quality.

The deployment of technology to support services or to generate services must offer leverage to add value and raise margins. Information technology and data management solutions are tools, not ends in themselves. These tools must serve the needs of the business model, to support and underpin core business operations. In this regard the technology is secondary to an understanding of the data, its value, and how that data can be manipulated and processed to add value to decision-making and to provide opportunities for the sale or exchange of the data.

Data Management strategy is all about:

- Elimination of Risk in decision-making

- Improved Productivity through fast access to data and exchange with partners, clients, processing systems and interpretation workstations.

- Adding Value by permitting the integration and exchange of data between the various sciences, such as geophysics and geology. The industry trend of bringing together experts in all fields of exploration adds value to the data by improving interpretation and analysis. This team approach leads to better decision-making.

- Elimination of Risk by Preserving and protecting E&P data assets

Thus outsourcing data management is a concept that is well suited to today’s industry. Its advantages are that the service becomes an indirect cost and that it eliminates expensive in-house capital expenditure and infrastructure. Its success depends upon the service provider having both the technology and the data management skills to deliver and manage the data in a consistent fashion, operating to high standards of service quality. Without the tools, and without effectively establishing what data is resident within the system, problems can arise, leading to delay, errors, higher costs and a level of service that ultimately can undermine the business operations of the client.

DM Strategy

Does DM strategy begin once data is acquired, or before data is acquired?

For some time now I have been evaluating and analysing the deep reasons for the failure of data management, and it comes down to a simple reality: data management strategy starts before data is acquired. Subsurface data is a single end-to-end flow. Commissioning expensive new 2D and 3D seismic surveys, or attempting to establish seismic arrays on the seafloor to generate 4D time-lapse seismic, is of no benefit if the data management and data processing systems are not fully integrated into the overall end-to-end flow, from acquisition to storage, management, processing, interpretation and back.

I have witnessed the hair pulling by business unit managers who know they acquired the data, and know that with these data they can more effectively manage their field or their reservoir, but find it can take weeks or months to gain access to it.

Data management strategies must exist to enable effective storage, management, retrieval and access to this data. Failure to observe this can result in significant losses in terms of opportunity to compete, or have a fatal impact upon key decisions about drilling activity, participation in licensing rounds, and the optimisation and management of existing assets.

The management of these data sets (seismic, well data, navigation data) is central to the ability of decision makers to interpret and analyze information when determining next areas of interest, when bidding on licensing rounds or when making the final decision to start exploration drilling.

The high cost of these data in itself ensures that active data management policies must be in position to extract maximum value. Over many years the industry has attempted to develop technology solutions to enable fast access and retrieval of these data for delivery to their advanced seismic processing and interactive interpretation workstations.

Data is constantly accessed, processed, reprocessed and analyzed, and the results of this work are subsequently stored for future use. Ultimately the volume of data being stored in various forms, from the original field data to intermediate and final processed data, is enormous: many petabytes. Strategies for managing, archiving and retrieving the data in a timely fashion, reducing cost and time, are a business imperative.

Lessons Learnt

Much of the discussion with respect to data management has been inward looking, either directed at internal solutions or drawn from those contractors that have served the industry for past decades. Yet data management is not the exclusive domain of E&P; it is addressed across a wide range of commercial and government organizations. Yes, of course, volumes may differ, as indeed may data value with respect to costs of acquisition. However, there are specific organizations that spend as much on data capture and have similar volumes of data to be managed.

Around these organizations solutions have been developed which in some cases exceed or surpass the kinds of technology and solutions being widely deployed in E&P today.

HSM technology, for instance, has been in use over the past three decades and still today typically offers a limited, if not primitive, method of file management. Elsewhere it has been developed to a more intelligent level, where storage and access can be at file level, at block/record level, or both. Intelligent HSM solutions provide an ability to view blocks and records within a file on a virtual basis, prior to destaging to disk. This enables the user to select specific data sets and reduces the overhead typically seen with standard HSM systems.

Tape technology has differed little between industries, the main variance being that non-oil commercial industries have not been locked into solutions driven by the data acquisition process. Thus they tend to use advanced high-density storage ranging up to 500 gigabytes per cartridge.
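To illustrate why block/record-level access matters, here is a minimal sketch of selecting a run of traces from a SEG-Y file by byte range rather than staging the whole file. It is not taken from any particular HSM product. It assumes a file with the standard 3200-byte textual header and 400-byte binary header, no extended textual headers, and fixed-length traces each preceded by a 240-byte trace header. The function names `trace_byte_range` and `read_traces` are hypothetical.

```python
TEXT_HEADER_BYTES = 3200    # SEG-Y textual file header
BINARY_HEADER_BYTES = 400   # SEG-Y binary file header
TRACE_HEADER_BYTES = 240    # header that precedes every trace


def trace_byte_range(first_trace, trace_count, samples_per_trace, bytes_per_sample=4):
    """Return (offset, length) in bytes covering the requested traces.

    Assumes fixed-length traces and no extended textual headers.
    """
    trace_bytes = TRACE_HEADER_BYTES + samples_per_trace * bytes_per_sample
    offset = TEXT_HEADER_BYTES + BINARY_HEADER_BYTES + first_trace * trace_bytes
    return offset, trace_count * trace_bytes


def read_traces(path, first_trace, trace_count, samples_per_trace, bytes_per_sample=4):
    """Read only the requested traces instead of the whole file."""
    offset, length = trace_byte_range(
        first_trace, trace_count, samples_per_trace, bytes_per_sample
    )
    with open(path, "rb") as handle:
        handle.seek(offset)
        return handle.read(length)
```

Under these assumptions, ten traces of 1,500 four-byte samples starting at trace 1,000 occupy 62,400 bytes beginning at byte offset 6,243,600, so a selection of that size need not require destaging a multi-gigabyte file.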

However the major shift has been towards moving data off tape based media to enable a once and for all transfer to low-cost, high density near line disk based storage.

The Cloud & Seismic Data

The cloud is a fact of life and has become a buzzword in the area of Big Data. Whether seismic data fits into this technology is one issue that has preoccupied my thinking over several years.

My experience in this sector leads me to believe that the Cloud may not be a suitable or sensible option for the management of seismic binary data.

Yes, of course, I accept that any data management solution and any technology should be able to connect and communicate with cloud technologies where appropriate. However, I have identified a number of issues that need to be carefully factored into a cloud-based strategy with respect to seismic binary data.

The areas identified include:

- Security – Would any oil company place their sensitive exploration data assets in the cloud? Are the risks justifiable?

- Scale – Can the cloud actually offer a storage capability that scales, in both data volume and price/performance, for seismic binary data? Big Data is generally understood to mean terabytes to petabytes made up of millions of small files or records. Seismic data is likewise terabytes to petabytes; one client we are addressing has 6 petabytes of data, which is relatively small. However, each of the files that make up these data sets is gigabytes in size. They are by no means small records or files, so scale and price/performance do become an issue.

- Data Integrity – Cloud-based solutions utilize data compression, which is perfectly satisfactory for unstructured data, transitional data, metadata, seismic indexes and spatial data. However, compressing seismic binary data can result in significant data loss and clipped amplitudes, which can deliver substandard results and an overall degradation of the seismic data, rendering it less than acceptable for the user, the geoscientist.

Today some software houses have designed and built their own seismic processing and data management tools that do include data compression. These tools are being promoted as one way to increase performance, to enable electronic transfer of otherwise very large data sets across Internet, Intranet and Fibre Channel, and to offer faster and closer collaboration with geoscientists. The tools include excellent seismic visualisation of 2D and 3D data sets, time slices and so forth. They also include unique tools that enable the user to choose the level of data compression that they feel is appropriate. A sliding bar allows anything from zero to 100% compression.

The first and most obvious question is why. Why would you need a sliding bar to establish the best compression ratio if you were confident that data compression has no impact? Of course, the question answers itself. It may well be that some limited data compression can be tolerated if the advantages are speed, delivery and accessibility.

However, once compressed, there is no going back. Data compression inevitably leads to clipped amplitudes, and clipping amplitudes has a knock-on effect across the seismic processing sequences, including velocity analysis. It is possible, of course, to reconstruct amplitudes using cubic spline algorithms and trace-following interpolation routines. However, in my 40 years I have yet to see any oil company knowingly degrade their data sets. One would anticipate that any such resulting data set would be carefully annotated and marked as such.
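The clipping effect can be illustrated with a small sketch. This is not the algorithm used by any particular vendor or cloud provider. It assumes the simplest possible lossy scheme, a fixed-scale quantisation of each sample to a signed 8-bit code, and the function names are hypothetical. Any sample whose amplitude exceeds the chosen full scale is clipped and cannot be recovered on decoding.

```python
def quantize_8bit(samples, full_scale):
    """Lossy encode: map [-full_scale, full_scale] to integer codes in [-127, 127].

    Samples outside the full-scale range are clipped to the nearest limit.
    """
    codes = []
    for value in samples:
        code = round(value / full_scale * 127)
        codes.append(max(-127, min(127, code)))
    return codes


def dequantize_8bit(codes, full_scale):
    """Decode the integer codes back to approximate amplitudes."""
    return [code / 127 * full_scale for code in codes]


def max_abs_error(original, restored):
    """Largest absolute difference between original and restored samples."""
    return max(abs(a - b) for a, b in zip(original, restored))


# Hypothetical trace: three modest amplitudes and two strong events.
trace = [0.1, -0.5, 0.9, 2.5, -3.0]
restored = dequantize_8bit(quantize_8bit(trace, full_scale=1.0), full_scale=1.0)
print(restored)
print(max_abs_error(trace, restored))
```

In this sketch the three in-range samples come back with small rounding errors, while the two strong events come back as exactly plus and minus the full-scale value: the original amplitudes of 2.5 and -3.0 are gone.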

4. NoSQL – Inherent in most cloud-based solutions is the acceptance and use of NoSQL as the primary engine within which queries and transactions take place. NoSQL has many performance and scalability advantages but, as with all technology, it also has very specific disadvantages. Big Data is usually characterised by Variety, Volume and Velocity, and CRM-type data of this kind fit well into the NoSQL world. Mostly these data are generated on the fly and have very little individual value; they are transactional data which, when collated, offer some value to a retail store, an airline building passenger preference profiles, or a major shopping mall predicting footfall.

Binary Data – The specific disadvantages relate to the query and transactional process. With Big Data, whether a given query or transaction completes may be of no consequence, but with subsurface, sinusoidal binary data a failure to complete could degrade the data. The loss of a sample or two may be no big deal; the loss of multiple traces would be. Data loss, stale reads and incomplete transactions all call into question the wisdom of using a NoSQL solution for subsurface binary data.

5. Risks – The cloud offering entails substantial price/performance, Data Security, Data Integrity and Scale based volume risks.
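One way to make the data loss and stale read concerns raised under NoSQL above checkable, rather than a matter of trust, is to keep a per-trace checksum manifest alongside the data and verify it after every retrieval. The following is a minimal sketch under that assumption, using SHA-256 from the Python standard library; the function names are hypothetical and it is not a description of any existing product.

```python
import hashlib


def build_manifest(traces):
    """Record a SHA-256 digest for every trace (each trace is a bytes object)."""
    return [hashlib.sha256(trace).hexdigest() for trace in traces]


def find_damaged(traces, manifest):
    """Return the indices of traces that are missing or differ from the manifest."""
    damaged = []
    for index, expected in enumerate(manifest):
        if index >= len(traces):
            damaged.append(index)  # trace lost entirely
        elif hashlib.sha256(traces[index]).hexdigest() != expected:
            damaged.append(index)  # trace altered or stale
    return damaged
```

A manifest built at load time can then be compared against whatever a later read returns, so that a dropped or outdated trace is reported instead of passing silently into processing.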

Taking all the above into account, and based on the collective subject matter expertise that SDA has with respect to these data and their life cycle, a data management solution needs not just to overcome the legacy of past-generation hardware and software, but also to be complementary to newer technologies such as cloud-based solutions.

It therefore seems there may be a number of significant advantages both within the cloud and within the more focused and bespoke data management solutions. The cloud manages Big Data most effectively where these data are in fact more text-based: metadata, indexes, unstructured data and spatial data structures.

However, where scientific, binary-based, continuous seismic and well trace information is concerned, the probability is that scale, cost, performance and data integrity issues will arise that degrade the quality of these very important data sets.

Therefore it seems likely that specific, targeted and focused data management solutions can act as a bridge between the “cloud” based offerings and more specific, bespoke solutions that are designed to scale and offer high quality, granular access to the scientific, binary, signal data sets that we address in the world of subsurface data management.

The Cloud offers many advantages with respect to scale, price/performance and accessibility for the so-called “Big Data” arena; however Seismic data is a data type that does not easily fit the criteria and does require more specific, dedicated data management structures and functionality to be of use to the end-user community. These solutions need to be the province of Subject Matter Experts, familiar with the data types and fully appreciative of their value, their structure, their life cycle and ultimate purpose. This is not something that I.T. Big Data Gurus can be expected to address. It is not CRM data.

Jeffrey Anthony Maskell – CEO Seismic Data Analytics limited.

jmaskell@seismicanalytics.com

www.seismicanalytics.com

Seismic Data Analytics is a limited company registered in England and Wales.

Registered number: 9671609

Copyright © 2017